So I’m trying to graduate this year, but simply getting my thesis done isn’t my style. It had to be automatic, or something.

Over the past 5 years I’ve been working on the development of a camera system to measure flow velocity in three dimensions. The nerd-lingo designation would be “3D3C”, that is, a three-dimensional measurement domain and three components of velocity. Technically, since the system is instantaneous (you don’t get the third dimension by scanning), I could make my bologna bigger by saying “4D3C”, with time as the fourth dimension. However, I like to keep that in my back pocket and only pull it out after serious threats have been made to my skillz.

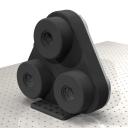

We buy the lenses and the CCD cameras, but the 3D camera itself I designed in Solidworks, and the CAD model is, of course, automatic—that is, I can type in three or four numbers as key dimensions in one part and all the other parts and corresponding screw holes move to the right place. These three or four numbers come from a design program written in Mathematica that I don’t like to talk about because it’s not automatic.

Here are some renderings of how the camera would have looked had the idiots who anodized it not almost completely ruined it.

But enough about the camera. The way it works is, you set up your flow of choice in a water tank (or oil tank, or whatever, but usually something around the density of water) and fill the tank with a carefully eyeballed amount of microscopic reflective beads. In my case that’s a probably toxic fluorescent powder made by Kodak that doesn’t exist anymore (and there ain’t much left in the jar). Then you shine a bright-ass laser, the same kind used to remove tattoos, into the tank, expanding the small beam to a cone or cylinder that covers the measurement volume. The laser is nothing but an extremely fancy flash to freeze the motion of the particles in each picture.

The scene is imaged through three lenses at a time, and two such picture “triplets” are taken at a precise time difference from each other, which, after running through the 7-years-in-development software, spits out a vector field of the displacement of the particles from one triplet to the next. And, much like high school physics, the velocity is simply the displacement divided by the time [between the first and second triplet].
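For the record, the high school physics step really is that small. Here is a minimal sketch in Python; the function name, the units (millimeters and seconds), and the sample numbers are all made up for illustration and have nothing to do with the real processing software.

```python
def velocity_from_displacement(displacement_mm, dt_s):
    """Return the (u, v, w) velocity in mm/s for one particle displacement.

    displacement_mm: (dx, dy, dz) between the first and second triplet, in mm
    dt_s: time between the two triplets, in seconds
    """
    if dt_s <= 0:
        raise ValueError("dt_s must be positive")
    # Velocity is displacement divided by time, one component at a time.
    return tuple(d / dt_s for d in displacement_mm)


# Hypothetical numbers: the particle moved a fraction of a millimeter
# in the 1 ms between the two triplets.
u, v, w = velocity_from_displacement((0.05, -0.02, 0.01), 0.001)
print(u, v, w)
```

The real software does this for every vector in the field; all of the hard work is in finding the displacements in the first place.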

To prove this isn’t all just a lie I made up to impress my SWG buddies, my thesis experiment consists of imaging the flow generated by a solid flap—or as I like to say, a microscope slide bolted to an R/C servo. Well, not quite bolted, since there is a belt between the two, but whatever. Here’s what the experiment looks like while it’s running:

The first time I tried it, it flapped too fast and the glass broke. Super-#$#%^-sonic, dude.

So what’s so cool about this? Nothing. Does it make the rice cheaper in China? No. It doesn’t cure cancer, either.

What is cool is that, as I mentioned, it’s been automated. Aside from mechanical failures, I can run experiments 24 hours a day, and start them from anywhere in the world with an internet connection. The software that actually records the images from the camera to the computer’s hard drive is run by a Python script. The script first starts a program that sends trigger pulses to the lasers and camera to make sure they are synchronized. Then it activates the servo controller to move the glass slide in a certain way, and the servo controller sends out a “start” trigger to the laser synchronizing program. The script records a set number of frames, shakes the slide back and forth to get rid of any bubbles that may have accumulated, exports the images it just recorded to a set directory, then waits 10 minutes and goes again, for a total of 30 times.
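The shape of that loop looks roughly like the sketch below. This is not the actual script: the step names are placeholders, each do-nothing `lambda` stands in for a call out to one of the real programs, and skipping the wait after the last cycle is my assumption.

```python
import time


def run_experiment(steps, cycles=30, wait_s=600, sleep=time.sleep, log=print):
    """Run every step in order once per cycle, pausing between cycles.

    steps: list of (name, callable) pairs, executed in list order
    cycles: how many times to repeat the whole sequence
    wait_s: pause between cycles in seconds (600 s = 10 minutes)
    """
    for cycle in range(1, cycles + 1):
        for name, action in steps:
            log(f"cycle {cycle}/{cycles}: {name}")
            action()
        # Assumption: no point waiting after the final cycle.
        if cycle < cycles:
            sleep(wait_s)


# Placeholder steps; in real life each one would launch an external program.
demo_steps = [
    ("start laser/camera timing program", lambda: None),
    ("move the flap (servo sends the start trigger)", lambda: None),
    ("record a set number of frames", lambda: None),
    ("shake the slide to clear bubbles", lambda: None),
    ("export images to the output directory", lambda: None),
]

# Dry run: two cycles, with the wait replaced by a no-op so it returns at once.
run_experiment(demo_steps, cycles=2, wait_s=600, sleep=lambda s: None)
```

Passing `sleep` and `log` in as arguments is just so the loop can be dry-run without waiting 10 minutes or touching any hardware.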

I wrote the timing program to be versatile, but also with automation in mind: once it fires the lasers a set number of times, it quits. The motor is controlled by custom firmware I wrote for an Atmel AT90USBKEY, which is half custom-written servo-controlling software and half ripped off from Atmel’s sample software so that it behaves like a COM port. So the flapper can be controlled from Hyperterminal in Windows via an old-school style interface. But I also wrote a program to send commands to the COM port from the command line, so that the Python script can run the motor controller. This program crashed once and I had to physically come in here and unplug-n-plug the USBKEY to reset it, since for some reason Windows had lost the COM port it provides. Wish I had an internet-ready unplug-n-plug robot.

The Python script outputs a log to the web [link no longer available], which, along with a web cam that sees nothing but black most of the time (and a few spurts of green and purple while the lasers are actually on), can in theory be used to make sure everything is still okay.

After an experiment is done, I copy all the images to another computer for processing, which takes up to 90 minutes: each experiment is 1,530 images, each 25 Mbytes in size, and the acquisition computer’s only got a 100Mbit connection. The images go to whichever of the other computers is not already busy processing and has enough disk space. The processing is also automatic; I use the same processing parameters for all experiments, so that also runs off a [separate] Python script. After processing, another script is there ready to put the images in a ZIP file and then delete the uncompressed ones.
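Two of those steps are easy to sketch in Python. The first function is just the arithmetic behind the copy time, assuming 1 Mbyte = 8 Mbit and a link that runs flat out with zero overhead (it doesn't). The second is one way a zip-then-delete script could look; the `*.tif` pattern and the function names are my placeholders, not what the real script uses.

```python
import zipfile
from pathlib import Path


def transfer_minutes(n_images, mbytes_each, link_mbit_per_s):
    """Best-case copy time in minutes, ignoring overhead and disk speed."""
    total_mbit = n_images * mbytes_each * 8  # 8 bits per byte
    return total_mbit / link_mbit_per_s / 60


def zip_and_delete(image_dir, archive_path, pattern="*.tif"):
    """Zip the matching files, check the archive, then delete the originals."""
    files = sorted(Path(image_dir).glob(pattern))
    with zipfile.ZipFile(archive_path, "w", zipfile.ZIP_DEFLATED) as zf:
        for f in files:
            zf.write(f, arcname=f.name)
    # Only delete once the archive reads back cleanly and has every file.
    with zipfile.ZipFile(archive_path) as zf:
        if zf.testzip() is not None or len(zf.namelist()) != len(files):
            raise RuntimeError("archive failed verification; originals kept")
    for f in files:
        f.unlink()
    return len(files)


# 1,530 images at 25 Mbytes each over a 100 Mbit link
print(transfer_minutes(1530, 25, 100))
```

That comes out to about 51 minutes in the ideal case, so "up to 90 minutes" over a real network is about what you would expect.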

In theory it would be possible to connect all the automatic steps into one giant automatic thesis. For example, in the 10 minutes of waiting between cycles, I could copy the images already exported to the computer which will process them, and start processing them. But that would make me obsolete, and so far, I’ve done 5 or 6 experiments and only 2 are good. The rest failed either because of an unreported recording error (meaning there is an internal error I don’t find out about until I look at the images) or because apparently the timing belt I was using is not meant to be in water for days at a time, because it dissolved-broke.

But the two that worked worked. You can see what years’ worth of work amounts to in rendering science by watching these movies [link no longer available] of legitimate unbuttered data. They are vector fields with color-coded velocity contours. The vectors are just one slice through the midline of the glass slide, since showing the whole volume at once is completely useless. The glass flap itself is not in the movie, but you should be able to tell where it is. I call it unbuttered because I haven’t yet grinded on the fence of ethics to make the data look perfect like everyone does before they wear it as a T-shirt to their favorite class.

Update 2008-05-09: you can go here to see the buttered movies. These should be viewed with the red/blue 3D glasses. The one with “vorticity” in the filename shows, in short, the axes of rotation of the water as the flap moves it. If it were a movie of a perfect smoke ring (“vortex ring”), then you would just see a circle (the axis of rotation of the smoke in the smoke ring). The other movie shows, sort of, the path the water is following at any instant.

Anyway, make sure you keep going to the website [link no longer available] to stare at the blackness of the web cam’s perspective: after this week I won’t be doing any more flapping, and will instead do some scientific squirting that doesn’t require automation. Remember to refresh every so often to update the log! How exciting.

What would I do to make this better? For starters, I would have a lamp on a computer-controlled switch so that I could see the flap while the computer was waiting 10 minutes—that way the web cam would actually be useful in trying to check for damage.

By the way, setting up the web cam was easier than spitting at a snail from ground level. I bought a “Microsoft LifeCam”, which seems to me should be called a “NoLifeCam” or “PornCam” (because, really, what else are they for) and wrote a really short Python script using the VideoCapture module. The Javascript to display the web cam images came from some guy who I’ve linked to on the page. Do yourself a favor and stay away from that Firewire #$#$, especially expensive stuff like Apple’s LameSight. It’s not worth the hassle to try to make it work in Windows. Not that the Microsoft PornCam is any better, since you have to install like 100 megs of software to get the damn driver, but at least it’s cheap.
